Benchmarking Omni-Vision Representation through the Lens of Visual Realms

TL;DR

New Benchmark

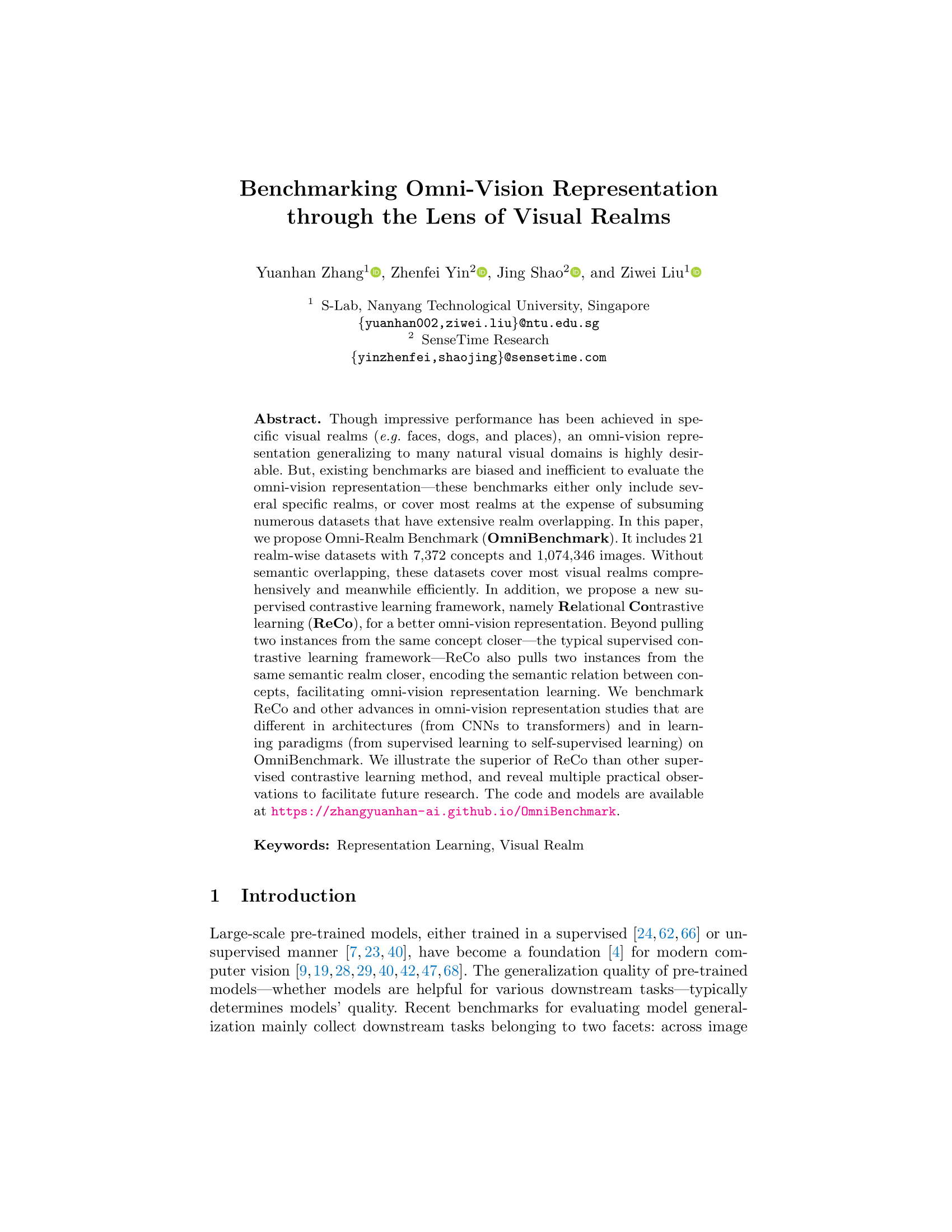

Omni-Realm Benchmark (OmniBenchmark) is a diverse (21 semantic realm-wise datasets) and concise (no two realm-wise datasets share a concept) benchmark for evaluating how well pre-trained models generalize across semantic super-concepts, or realms, e.g., from mammals to aircraft.
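The "concise" property amounts to the concept sets of the realm-wise datasets being pairwise disjoint. Below is a minimal sketch of such a check. It assumes each realm dataset is represented simply as a set of concept names. The function name `find_concept_overlaps` and the toy realm contents are hypothetical and are not part of any OmniBenchmark release.

```python
from itertools import combinations
from typing import Dict, List, Set, Tuple


def find_concept_overlaps(realms: Dict[str, Set[str]]) -> List[Tuple[str, str, Set[str]]]:
    """Return every pair of realm datasets that share at least one concept.

    An empty result means the realm datasets are pairwise disjoint,
    i.e., no concept is counted in more than one realm.
    """
    overlaps = []
    # Sort by realm name so the output order is deterministic.
    for (name_a, concepts_a), (name_b, concepts_b) in combinations(sorted(realms.items()), 2):
        shared = concepts_a & concepts_b
        if shared:
            overlaps.append((name_a, name_b, shared))
    return overlaps


if __name__ == "__main__":
    # Hypothetical toy realms: disjoint, so no overlaps are reported.
    print(find_concept_overlaps({"mammal": {"dog", "cat"}, "aircraft": {"jet", "glider"}}))
    # A deliberately overlapping pair: "bat" appears in both realms.
    print(find_concept_overlaps({"mammal": {"dog", "bat"}, "nocturnal": {"bat", "owl"}}))
```

Checking every pair costs O(R²) set intersections for R realms. With only 21 realms, that cost is negligible.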

New Supervised Contrastive Learning Framework

We introduce a new supervised contrastive learning framework, Relational Contrastive (ReCo) learning, which is designed to be better suited to omni-vision representation learning.

Abstract

Though impressive performance has been achieved in specific visual realms (e.g., faces, dogs, and places), an omni-vision representation that generalizes to many natural visual domains is highly desirable. However, existing benchmarks are biased and inefficient for evaluating omni-vision representation: they either include only a few specific realms, or cover most realms at the expense of subsuming numerous datasets with extensive realm overlap. In this paper, we propose the Omni-Realm Benchmark (OmniBenchmark). It includes 21 realm-wise datasets with 7,372 concepts and 1,074,346 images. With no semantic overlap, these datasets cover most visual realms both comprehensively and efficiently. In addition, we propose a new supervised contrastive learning framework, Relational Contrastive learning (ReCo), for better omni-vision representation. Beyond pulling two instances from the same concept closer (as the typical supervised contrastive learning framework does), ReCo also pulls two instances from the same semantic realm closer, encoding the semantic relations between concepts and facilitating omni-vision representation learning. On OmniBenchmark, we benchmark ReCo and other advances in omni-vision representation studies that differ in architecture (from CNNs to transformers) and in learning paradigm (from supervised to self-supervised learning). We demonstrate the superiority of ReCo over other supervised contrastive learning methods, and reveal multiple practical observations to facilitate future research.
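The abstract describes ReCo's objective only at a high level. The sketch below is one plausible reading, not the authors' implementation. It assumes a SupCon-style supervised contrastive loss computed twice: once with concept labels defining the positives, and once with realm labels defining them. The two terms are then summed with a weight. The helper names `supcon_term` and `reco_style_loss`, the temperature `tau`, the weight `realm_weight`, and the toy data are all hypothetical.

```python
import math
from typing import List, Sequence


def l2_normalize(v: Sequence[float]) -> List[float]:
    """Scale a (nonzero) feature vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def supcon_term(z: List[List[float]], labels: Sequence[str], tau: float) -> float:
    """SupCon-style loss: per anchor, mean of -log p(positive) over samples sharing its label.

    The softmax denominator runs over every sample except the anchor itself.
    Anchors with no positive in the batch are skipped.
    """
    n = len(z)
    # Temperature-scaled cosine similarities (z is assumed to be L2-normalized).
    sims = [[sum(a * b for a, b in zip(z[i], z[j])) / tau for j in range(n)] for i in range(n)]
    total, anchors = 0.0, 0
    for i in range(n):
        positives = [p for p in range(n) if p != i and labels[p] == labels[i]]
        if not positives:
            continue
        others = [a for a in range(n) if a != i]
        # Numerically stable log-sum-exp over the denominator.
        m = max(sims[i][a] for a in others)
        log_denom = m + math.log(sum(math.exp(sims[i][a] - m) for a in others))
        total += -sum(sims[i][p] - log_denom for p in positives) / len(positives)
        anchors += 1
    return total / anchors if anchors else 0.0


def reco_style_loss(features, concepts, realms, tau=0.1, realm_weight=0.5):
    """Hypothetical ReCo-style objective: concept-level term plus a weighted realm-level term."""
    z = [l2_normalize(f) for f in features]
    concept_loss = supcon_term(z, concepts, tau)  # pull same-concept instances together
    realm_loss = supcon_term(z, realms, tau)      # also pull same-realm instances together
    return concept_loss + realm_weight * realm_loss


if __name__ == "__main__":
    concepts = ["dog", "dog", "cat", "jet"]
    realms = ["mammal", "mammal", "mammal", "aircraft"]
    # Toy 2-D embeddings: same-realm samples clustered vs. scattered.
    clustered = [[1.0, 0.0], [1.0, 0.0], [0.8, 0.6], [-1.0, 0.0]]
    scattered = [[1.0, 0.0], [-1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
    print("clustered:", reco_style_loss(clustered, concepts, realms))
    print("scattered:", reco_style_loss(scattered, concepts, realms))
```

Here the realm term treats a dog and a cat as positives for each other. An embedding that places them near each other, away from the jet, therefore scores lower than one that scatters them. How the paper actually constructs and weights the realm-level relation may differ from this simple sum.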

Paper

Benchmarking Omni-Vision Representation through the Lens of Visual Realms

Yuanhan Zhang, Zhenfei Yin, Jing Shao and Ziwei Liu

In ECCV, 2022.

@InProceedings{zhang2022omnibenchmark,
title = {Benchmarking Omni-Vision Representation through the Lens of Visual Realms},
author = {Yuanhan Zhang and Zhenfei Yin and Jing Shao and Ziwei Liu},
booktitle = {European Conference on Computer Vision (ECCV)},
year = {2022},
}

Acknowledgements

This work is supported by NTU NAP, MOE AcRF Tier 2 (T2EP20221-0033), and under the RIE2020 Industry Alignment Fund – Industry Collaboration Projects (IAF-ICP) Funding Initiative, as well as cash and in-kind contribution from the industry partner(s).